Flesh in the Machine

January 28, 2020

This essay by art historian and media theorist Kris Paulsen is part of a series of essays and artist contributions that together form an interdisciplinary study into how we feel and touch in our technologically mediated, dematerialized digital cultures and how this is expressed in our social and artistic practices. Paulsen looks to the fantasy of bodiless space to see how our bodies were pulled into that place and to see how we might make visible our fleshy capture in immaterial space.

On 8 February 1996, cyberspace was pronounced ‘free’. With all of the bombast of a revolutionary founding father shouting down the old lords, John Perry Barlow, Grateful Dead lyricist turned internet civil liberties activist and co-founder of the Electronic Frontier Foundation, declared cyberspace ‘the new home of Mind’, free from the sovereignty, tyranny and authority of nations. To ‘the governments of the Industrial World, [those] weary giants of flesh and steel’, he declared: ‘Your legal concepts of property, expression, identity, movement and context do not apply to us. They are all based on matter, and there is no matter here.’1 To get into this new world, however, we would have to leave our bodies – our matter – behind. This was the price of liberty, but Barlow and other ‘cyber-utopians’ did not figure it as a great loss. Freedom from the body meant not only freedom from physical coercion and laws, but also from the trappings of race, class, gender and sex, as well as all of the prejudice and privilege attached to those embodied markings and performances. In cyberspace, we could be anything we wanted; we could be anonymous; we could be no one.

Things did not turn out quite as planned. Those who announce freedom for newly colonized territories tend to speak only for (or even only recognize) the few. Beyond the institutionalized privileges of race, sex and class that made such claims imaginable, what has become most apparent in the intervening years is how much our bodies matter to and in cyberspace – where they are, how they are typed, their defining physical landmarks – as well as just how invested the industrial world would be in tracing these contours. What Barlow may not have been able to foresee when he declared the independence of cyberspace was that it would, in turn, become a colonizing force in the physical world, and that both nations and corporations would be desperate for our matter and the data it produces. This essay looks to the fantasy of bodiless space to see how our bodies were pulled into that place – as biometric data, as geo-spatial coordinates, as information fuelling a neo-phrenology that seeks not to eliminate the biases hung upon the physical body but to automate their application – and to see how we might make visible our fleshy capture in immaterial space.

Cyberspace Is Fiction

It is no surprise that Barlow’s hopes for an egalitarian and bodiless future in cyberspace did not come to pass. Looking back twenty-four years later, it is evident that these claims were blinkered by privilege. Cyberspace was fiction. It was inspired by the sci-fi writings of William Gibson, who imagined a future in which ‘console cowboys’ left their bodies slumped at workstations to enter a 3D virtual reality representation of data. In the ‘matrix’ of what he dubbed ‘cyberspace’, Gibson’s users could be information rustlers and data bandits on another plane of existence.2 The intoxication of this immaterial world led them to develop an open hostility to the body and its limitations. In Gibson’s words, cyberspace is a ‘consensual hallucination’ of ‘bodiless exultation’; the body was mere meat, a prison of flesh.3

Gibson’s fiction became the guiding model for a new reality shaped by a generation of hardware developers and software engineers. The online world was a new frontier, and though it was strangely empty of ‘natives’, some settlers would be assimilated and acclimated more easily than others. Gibson’s vision of the future was stirred into the primordial soup of the ‘Californian Ideology’, which mixed the ego-centric rationalism of Ayn Rand’s Objectivism with neoliberal economic theory and West Coast countercultural ideologies. Its adherents envisioned a new social world that paralleled the new technology and infrastructure. Cyberspace was everywhere and nowhere, and just as the information travelling over the net is separated into packets and reconstituted at its destination, one could imagine identity as similarly fragmented, recombined and reimagined in cyberspace. For many early users, particularly as described by sociologist Sherry Turkle, anonymity and detachment from physicality constituted the great liberation of the net, as they provided an opportunity to test how all aspects of identity might be performative and fluid.4 In cyberspace, then, the repressive and prejudicial constraints placed on those with marginalized, minority or disempowered identities could be thrown off as users chose how they wished to represent and mark themselves. Cyber-utopians saw this ability to self-determine or to be anonymous as inherently democratic: if no one knew an individual’s race, gender, sex or class, then it could not be held against them. All were equal, or to put it otherwise: all could be assumed to be white and male. The net did not disrupt hegemony; it merely baited access to it.5 ‘Othered’ identities announced online, new media theorist Lisa Nakamura has shown, became ‘prostheses’ for exotic role playing and ‘identity tourism’ that could be ‘donned and shed without “real life” consequences.’6

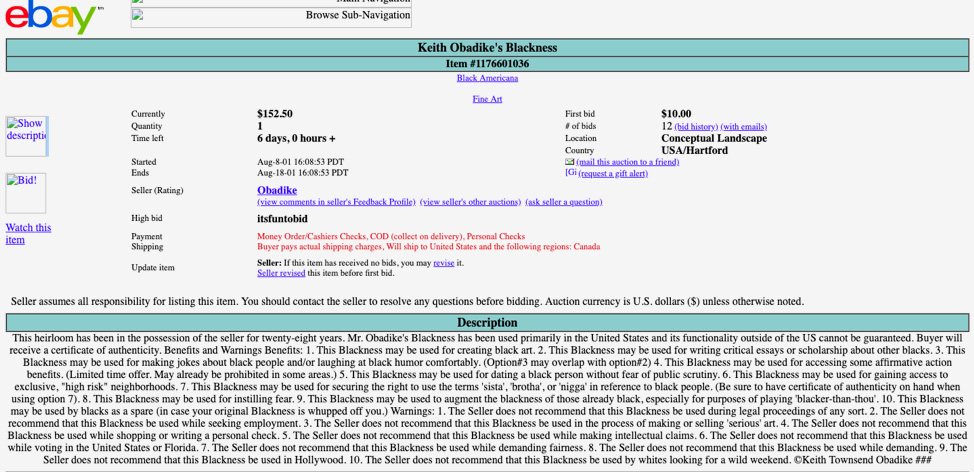

This logic undergirds Mendi and Keith Obadike’s Blackness for Sale (1998), which attempted to sell Keith Obadike’s racial identity on eBay, before the online auction site halted the bidding and removed the listing. The Obadikes’ auction of this ‘heirloom’ mocks the idea of detaching or discarding embodied identities and calls attention to how any potential equality in a disembodied cyberspace does nothing to affect the lived realities of the marginalized and oppressed. The description of Obadike’s blackness offers several benefits and warnings for the buyer, for example: ‘This Blackness may be used for making jokes about black people and / or laughing at black humor comfortably’; ‘This Blackness may be used for gaining access to exclusive, “high-risk” neighborhoods’; ‘This Blackness may be used for instilling fear’; ‘The seller does not recommend that this Blackness be used during legal proceedings of any sort’; and ‘The seller does not recommend using this Blackness while seeking employment.’ The Obadikes’ listing makes the irony of the fluid identities promised by cyber-utopian rhetoric starkly apparent: if casting off embodied identities in cyberspace seems like a liberation, it is because of the persistent and unrelenting institutional and systemic oppression mapped onto the bodies of minorities and marginalized groups in the real world, which cyberspace does nothing to remedy. In fact, as Nakamura has argued, identity play online tends to import, essentialize and exacerbate stereotypes, making cyberspace no more liberating or free for people of colour. Despite all the excited claims about a post-race, post-gender era, representation on the early net was not equal, nor was access to the capital and education necessary to get there. The democracy and equality promised there were primarily for those who already had them.

What makes cyber-utopian claims seem quaint from the present day is not how quickly they were outed, by theorists like Nakamura, as based in the very privileges they claimed to be overturning, or how their post-body politics ended up deploying and re-entrenching stereotypes for entertainment. Rather, it is how aggressively the body has been pulled into digital space to make us knowable and predictable as specific individuals, and how emphatically our data bodies come to bear on our real ones. Indeed, we might find our fleshy identities to be more fluid and performative than our digital ones. As it has turned out, the consciously constructed online identity is not the one that represents us in our contemporary moment, nor is it our physical body ‘in real life’. Rather, all of our many selves are shadowed and pre-empted by what the artist-activists Critical Art Ensemble named, already in 1995, the virtual self’s ‘fascist twin’: the data body. For the privilege of a self-determining virtual self and access to cyberspace, they write, ‘Payment was taken in the form of individual sovereignty…. With the virtual body came its fascist sibling, the data body – a much more highly developed virtual form, and one that exists in complete service to the corporate and police state.’7 Cyber-utopians, they argue, were, perhaps unknowingly, in alignment with corporate and governmental interests. How could it be otherwise? The decentralized structure of the internet was not an anarchist invention, but one developed by the RAND Corporation as a means to ensure continuous and efficient governmental control in the event of total war.8

Our data bodies skulk behind us and race ahead of us as we surf the net or navigate the real world, determining who we are and what we can do. They do not honour us as masters; they turn us into data products to be exported to governmental and corporate consumers. Moreover, algorithms produced by these data consumers are authoring our lives based on our data bodies. They determine how our neighbourhoods will be policed and how long our prison sentences will be.9 They determine if we will be hired for jobs, what advertisements we will be shown, and what products we will buy and at what price point. They determine if we will pass through airport security. Our data bodies tell authorities – corporate or governmental – what our interests are, what our genders should be, our sexual orientations, our political beliefs, and whether our faces are wanted (or similar enough to those wanted) by police. Rather than cyberspace allowing us to determine and define our virtual selves, we unwittingly produce evil twins. They are not freed from our physical bodies but attached to them, dragging our meat into cyberspace at the same time as they begin to affect the outside world: pictures of our faces and bodies are scraped from the net and tagged, or collected at border checkpoints along with our fingerprints; the links our fingers choose to click and how long our eyes linger on a page are logged; we draw maps by moving our mobile phones across geographical space; CCTV knows the location of our cars, or if we have been present at a protest or rally, or if we are a person of some undefined ‘interest’.10 Critical Art Ensemble explains,

The most frightening thing about the data body, is that it is at the center of an individual’s social being. It tells the members of officialdom what our cultural identities and roles are. We are powerless to contradict the data body. It is the word of law. One’s organic being is no longer a determining factor, from the point of view of corporate and government bureaucracies. Data have become the center of social culture, and our organic flesh is nothing more than a counterfeit representation of original data.11

The data body is the dominant twin. It is more important, more defining, now, than even our organic bodies. At every moment in the physical world or when we are in cyberspace, we are nurturing our data bodies. If our promised virtual bodies were consciously performed and potentially liberated, fluid selves, how can we see and get to know our more rigid and ever-growing data bodies that follow us like shadows?

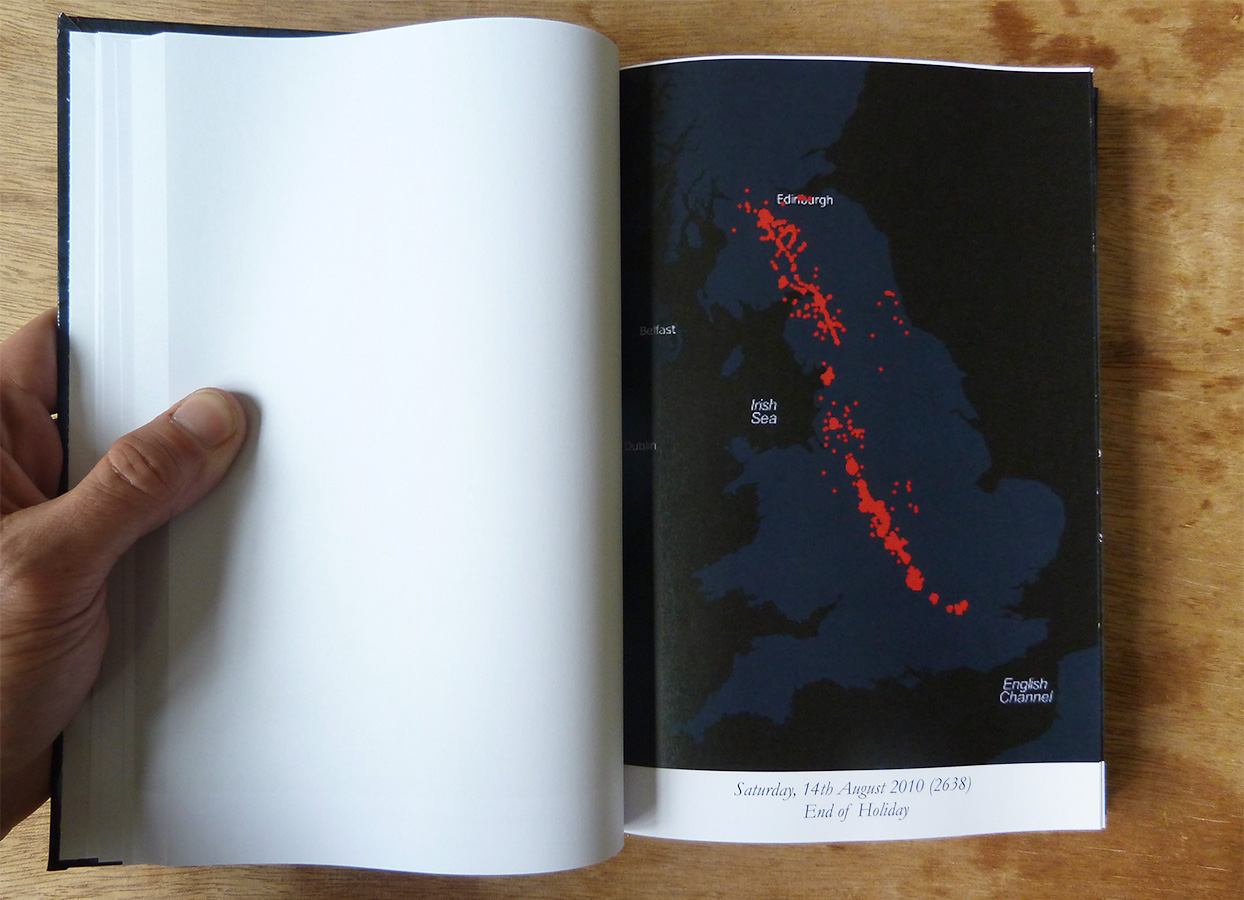

Evil Twins

In 2011, after researchers revealed that Apple’s iPhones surreptitiously tracked and stored users’ location data, artist James Bridle took his data body out for a walk. Writing scripts to export the information stored in his phone, Bridle produced Where the F**k Was I?, a thick tome of maps plotting his whereabouts for the better part of a year. His data body, he writes, seemed to have a better memory than his own: the map recounts days and trips of which Bridle had no recollection and forced him to reconstruct what he was doing on those days to bring his selves into sync.12 This does not mean that the data body gives an unquestionably true account of its wandering and forgetful counterpart. These pinpoints are approximations, as anyone watching their blinking dot on a smartphone map knows. It may put you somewhere you are not – your physical body becomes just a range of possibilities ‘cross referenced … with digital infrastructure’.13 It is the plot point, however, that will become fact, and your experience mere memory or hearsay. The data body collapses your physical identity into that of the technology you carry, with all its idiosyncrasies and slight inaccuracies. To prove it, this phantom body, with a warrant, can testify to your whereabouts in court. Fitbits have undermined assault allegations and proven insurance claims.14 Small variations that might distort a single day become solid with repetition and time. Your data body will come to learn your secrets, even those you would not divulge under any compulsion: in 2017, energetic soldiers accidentally revealed the locations of military bases and spy outposts across the world through their repeated jogging routes. Their data bodies traced daily the contours of secret sites and their internal architecture. The third-party company that owned this bit of their data bodies released the information without understanding what it revealed.15 Rather than staying safely in cyberspace, the data bodies crawl out to create real-world effects. Instances like this reveal our data bodies, and show that while they may be based on our physical bodies, movements and characteristics, we do not own or control them. Bridle’s maps purport to show us where he has been, or at least where his phone has been – but no: they are the partial portrait of that shadow other who, like those of the soldiers, has come to be more real and consequential to the structures of power than even oneself. The physical fitness of the soldiers and where their real bodies go are of little significance; it is their data bodies and where they have been that matter.

Gibson’s console cowboys may have thought the body was a prison of flesh, but data has become a prison of the body. ‘More than ever before’, Zach Blas writes:

[I]nformation is generated from bodies through a multitude of devices that proliferate globally. To move through physical space is also to traverse the sprawl of CCTVs; online activity is subject to the incessant aggregation of dataveillance; smartphones exude geo-traces that are waiting to be mapped, now and in the future; commercial and governmental services alike necessitate the surrender of personal data; while contemporary bureaucracies, with the aid of management software, transform the body and its activities into a perpetually fertile site for the increasingly precise documentation of life.16

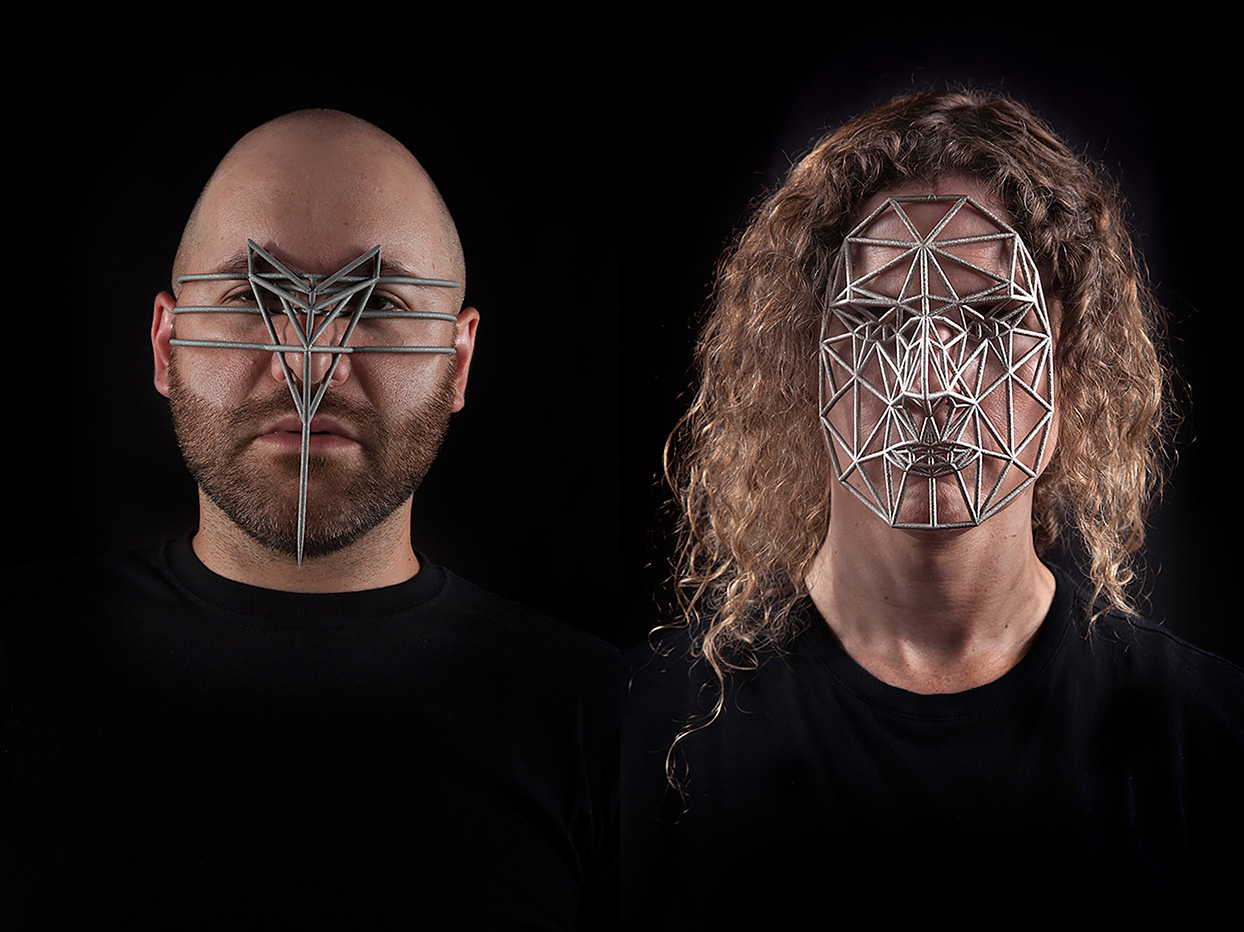

Blas’ pinching, painful Face Cages (2013–16) export the diagrams that map the significant features of our faces, those that will be used to compare us against databases of mugshots and surveillance footage, into metal mesh-works that literalize the virtual traps that ensnare our physical bodies. Blas transposes the data body onto the physical one and makes visible this shadow world and its place in the legacy of older technologies of domination and capture, such as shackles and prison bars,17 just as the Obadikes’ auction of blackness references the slave auction block. The endurance performances Blas produced of queer artists and collaborators wearing their bespoke Face Cages give a glimpse of what it would look like if we could see our data bodies and feel the pressure they exert upon our flesh. The biometrics of queerness, as with any other neo-phrenology, Blas points out, is terrifyingly suspect. AI facial recognition algorithms have been trained to predict sexual orientation or political views.18 ‘What could be the benefits of proving to the world that such a recognition apparatus exists?’, asks Blas.19 At best, we become targets for more precise advertisements and political messaging. As civil rights protections for gender identity are under threat in the United States, and as authoritarian powers rise around the globe, it is easy to imagine even more sinister uses of data bodies, such as China’s tracking of Uighurs.20

Blas’ Face Cages are not masks that obscure, but ones that essentialize and define us. Despite ‘democratically’ subjecting all people to capture, data bodies do not represent equally. Just as the utopian fantasy of the virtual body imagined race and gender into irrelevance, the same inherent biases in technology development have led to software and hardware that do not capture all bodies with the same degree of accuracy. Rather than creating a measure of anonymity or privacy for minority persons, the inaccuracies cause friction for their movement through the world. For example, facial recognition technology tends to misidentify dark-skinned faces at a much higher rate than light ones, which in turn creates false positives as wanted faces are matched against CCTV footage or government repositories of photographs from driver’s licenses, passports and visa applications.21

Transgender and nonbinary travellers consistently appear as security risks at airport checkpoints because their bodies, and the less rigid identities promised by cyber-utopians, fail to align with the strict definitions demanded by body scanners.22 Their physical features become ‘anomalies’ that do not sync with the requirements of the data bodies that govern our lives and ensure our privileged passages through the physical and virtual worlds. Data bodies are produced by our bodies’ collaboration with data, but they also precede, pre-empt and police us.23

Exorcize Machines

This is the part of the essay where one wants to find suggestions for how to remedy our post-utopian cybertrap, or practices that do more than make visible our data bodies and their shadow presences. None, however, are forthcoming, as I am not sure what they are. We might follow Tega Brain and Surya Mattu’s lead and create Unfit Bits (2015), devices that attempt to game our data bodies by spinning them silly. Simple machines, such as metronomes, wheels and pendulums, jokingly simulate the workouts required for insurance discounts while the user does other things – perhaps, as their promotional video suggests, more work at the computer, playing video games or driving a cab.24 These are charming objects, but they offer little escape. They create noise and register frustration and displeasure, but we would be foolish to think our data bodies so dim. They know if you are the type to simulate a workout, especially if you are simultaneously on the computer, playing a networked game or picking up ride-share passengers. Rather, what we need now are exorcize machines. Bridle and Blas make our possession by shadow others visible; they illustrate how with every action we unknowingly create doubled selves, in addition to and in excess of any conscious choices we make about how we present or construct our identities online. Creating noise or going dark are options, but they are not likely to liberate us from the dominance of the data body, which will not be befuddled or starved off. Such behaviours will only produce informatic aberrations likely to slow or impede our movement through data and physical space.25 We need new ideas, tactics to exorcize our data bodies, to get them out in the open and wrest back from them some of the agency and self-determination we were promised.

1. John Perry Barlow, ‘A Declaration of the Independence of Cyberspace’, Electronic Frontier Foundation, 8 February 1996, eff.org, published on the occasion of the ‘24 Hours in Cyberspace’ event. Then US Vice President Al Gore was another contributor to the event. It should be noted that the political impetus for Barlow’s declaration was the passing of the 1996 Telecommunications Act. While the bill itself aimed to deregulate ownership of the internet by opening it up to competition (the effect was actually the opposite), it also included the Communications Decency Act, which sought to regulate obscenity and pornography on the internet. This section of the bill was later struck down as a violation of First Amendment guarantees of free speech.

2. Gibson first uses the term ‘cyberspace’ in his 1981 short story ‘Burning Chrome’ and more fully develops it in his influential novel Neuromancer (1984). William Gibson, ‘Burning Chrome’, Burning Chrome (New York: EOS / Harper Collins, 2003), p. 168.

3. William Gibson, Neuromancer (New York: Ace / Penguin Random House, 2018 [1984]), p. 5.

4. See Sherry Turkle, Life on the Screen (New York & London: Simon and Schuster, 1995), p. 12.

5. Lisa Nakamura, ‘Cybertyping and the Work of Race in the Age of Digital Reproduction’, New Media, Old Media, ed. Wendy Hui Kyong Chun and Thomas Keenan (New York & London: Routledge, 2006), p. 319.

6. Ibid., p. 323.

7. Critical Art Ensemble, ‘Appendix: Utopian Promises – Net Realities’, Flesh Machine (Brooklyn: Autonomedia, 1998), pp. 144–45.

8. Critical Art Ensemble describes the cyber-utopian ideology as one that represents ‘the most repressive apparatus of all times’, the internet, ‘under the sign of liberation’. Ibid., p. 143. See also Paul Baran, ‘On Distributed Communications’, RAND Memorandum RM-3420-PR, August 1964, rand.org.

9. See Tom Simonite, ‘Algorithms Should Have Made Our Courts More Fair. What Went Wrong?’, Wired, 5 September 2019, wired.com; Caroline Haskins, ‘Dozens of Cities Have Secretly Experimented with Predictive Policing Software’, Vice, 6 February 2019, vice.com.

10. James Bridle, ‘How Britain Exported Next-Generation Surveillance’, Medium, 18 December 2013, medium.com.

11. Critical Art Ensemble, ‘Appendix’, p. 146.

12. James Bridle, ‘Where the F**k Was I? (A Book)’, booktwo.org, 24 June 2011, booktwo.org.

13. Ibid.

14. Miles Snyder, ‘Police: Woman’s fitness watch disproves rape report’, ABC News, 19 June 2015, abc27.com; Parmy Olson, ‘Fitbit Data Now Being Used in the Courtroom’, Forbes, 16 November 2014, forbes.com.

15. Alex Hern, ‘Fitness Tracking App Strava Gives Away Location of Secret US Army Bases’, The Guardian, 28 January 2018, theguardian.com.

16. Zach Blas, ‘“A Cage of Information”, or, What is a Biometric Diagram’, Documentary Across Disciplines, ed. Erika Balsom and Hila Peleg (Cambridge, MA: MIT Press, 2016), p. 83.

17. Zach Blas, Face Cages (2013–16), zachblas.info.

18. Sam Levin, ‘Face-reading AI will be able to detect your politics and IQ’, The Guardian, 12 September 2017, theguardian.com; Sam Levin, ‘New AI can guess whether you’re gay or straight from a photograph’, The Guardian, 7 September 2017, theguardian.com.

19. Zach Blas, Facial Weaponization Suite: Communiqué: Fag Face (2012), zachblas.info.

20. Marisa Iati, ‘Supreme Court, set to rule on LGBTQ rights at work, addressed gender discrimination 30 years ago’, Washington Post, 8 October 2019, washingtonpost.com; Paul Mozur, ‘One Month, 500,000 Face Scans: How China is Using AI to Profile a Minority’, New York Times, 14 April 2019, nytimes.com.

21. See Steve Lohr, ‘Facial Recognition Is Accurate, if You’re a White Guy’, New York Times, 9 February 2018, nytimes.com; Russell Brandom, ‘Amazon’s facial recognition matched 28 members of Congress to criminal mugshots’, The Verge, 26 July 2018, theverge.com; Sam Levin, ‘More than half of US adults are recorded in police facial recognition databases, study says’, The Guardian, 18 October 2016, theguardian.com.

22. Allison Hope, ‘The trauma of TSA for transgender travelers’, CNN, 17 October 2019, edition.cnn.com.

23. Joy Buolamwini’s research, in particular, has exposed the consistent racial and gender biases produced through data bodies and how their misalignment with the physical body turns virtual information into real-world problems. Joy Buolamwini, ‘Artificial Intelligence Has a Problem with Gender and Racial Bias. Here’s How to Solve It’, Time Magazine, 7 February 2019, time.com.

24. Tega Brain and Surya Mattu, Unfit Bits, unfitbits.com.

25. See, for example, Janet Vertesi’s experiment to see if she could keep her data body from discovering that she was pregnant. The lengths she had to go to in order to keep this secret made her appear like a criminal. Janet Vertesi, ‘My Experiment Opting Out of Big Data Made Me Look Like a Criminal’, Time Magazine, 1 May 2014, time.com.

Kris Paulsen is an art historian and media theorist. She is Associate Professor in the Department of History of Art and the Film Studies Program at The Ohio State University. She teaches contemporary art history with a focus on time-based media. Her research and writing address the intersections of art and technology from the 1960s to the present. She is the author of Here / There: Telepresence, Touch, and Art at the Interface (MIT Press, 2017). Her current research project, ‘Against Algorithms (or The Arts of Resistance in the Age of Quantification)’, addresses the logics of quantification and algorithmic structures in contemporary art, culture and activism.